Lifestyle

Will Tesla Autopilot Have an Ethical Component?

Should autonomous driving algorithms take ethical considerations into account? Yes, say researchers in a new study and the MIT Technology Review.

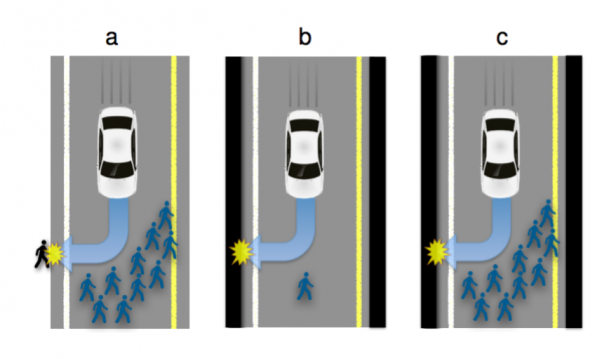

Fast forward a few years: your self-driving Tesla rounds a corner and comes upon 10 pedestrians in the road ahead. Walls line both sides of the road, and crashing into either one will kill or seriously injure you. How should the autonomous driving algorithm handle that situation?

Three researchers (Jean-Francois Bonnefon of the Department of Management Research at the Toulouse School of Economics, Azim Shariff of the Department of Psychology at the University of Oregon, and Iyad Rahwan of the Media Laboratory at the Massachusetts Institute of Technology) posed that question to hundreds of people using Amazon’s Mechanical Turk, an online crowdsourcing tool.

According to a report published on arXiv, most people are willing to sacrifice the driver in order to save the lives of others. Of those surveyed, 75% thought the most ethical solution was to swerve, but only 65% thought cars would actually be programmed to do so. Not surprisingly, the number of people who said the car should swerve dropped dramatically when they were asked to imagine themselves, rather than some faceless stranger, behind the wheel.

“On a scale from -50 (protect the driver at all costs) to +50 (maximize the number of lives saved), the average response was +24,” the researchers wrote. “Results suggest that participants were generally comfortable with utilitarian autonomous vehicles (AVs), programmed to minimize an accident’s death toll,” according to a report on IFL Science.

The legal issues presented by this research could not be more complex. In theory, legislators and regulators could require that autonomous driving algorithms include an unemotional “greater good” ethical component. But what if those laws or regulations allow manufacturers to offer various levels of ethical behavior? If a buyer knowingly chooses an option that provides less protection for innocent bystanders, will that same buyer then be legally responsible for what the car’s software decides to do?

“It is a formidable challenge to define the algorithms that will guide AVs confronted with such moral dilemmas,” the researchers wrote. “We argue to achieve these objectives, manufacturers and regulators will need psychologists to apply the methods of experimental ethics to situations involving AVs and unavoidable harm.”

An article in the MIT Technology Review titled “Why Self-Driving Cars Must Be Programmed to Kill” argues that the very fact that self-driving cars are safer than human drivers creates a new dilemma. “If fewer people buy self-driving cars because they are programmed to sacrifice their owners, then more people are likely to die because ordinary cars are involved in so many more accidents,” the MIT article says. “The result is a Catch-22 situation.”

In the summary to their study, the researchers argue, “Figuring out how to build ethical autonomous machines is one of the thorniest challenges in artificial intelligence today. As we are about to endow millions of vehicles with autonomy, taking algorithmic morality seriously has never been more urgent.”



Elon Musk seemingly confirms Cybertruck gift to 13-year-old cancer fighter

Diagnosed in 2018 with a rare form of brain and spine cancer with no cure, the teen had undergone 13 surgeries by the time he was 12.

Elon Musk has seemingly confirmed that he will be sending a Tesla Cybertruck to Devarjaye “DJ” Daniel, a 13-year-old Houston boy fighting brain cancer. The teen was recognized as an honorary Secret Service member by U.S. President Donald Trump during his address to Congress on Tuesday.

A Chance Meeting

The Tesla CEO’s Cybertruck pledge was mentioned during DJ’s short interview with CNN’s Kaitlan Collins. When Collins asked the 13-year-old what he told the Tesla CEO, DJ answered that he asked for a Cybertruck.

“I said, ‘can you do me a big favor, when you get back to Houston can you send us a Cybertruck down there?’” the cancer fighter stated.

Daniel noted that Musk responded positively to his request, which was highlighted by Collins in a post on X. Musk responded to the post with a heart emoji, suggesting that he really will be sending a Cybertruck to the 13-year-old cancer fighter.

Teen’s Cancer Battle Inspires

Diagnosed in 2018 with a rare form of brain and spine cancer with no cure, Daniel had undergone 13 surgeries by the time he was 12. During his speech, Trump highlighted the 13-year-old’s long battle with his disease.

“Joining us in the gallery tonight is a young man who truly loves our police. The doctors gave him five months at most to live. That was more than six years ago. Since that time, DJ and his dad have been on a quest to make his dream come true,” Trump stated.

Daniel officially received an honorary badge from U.S. Secret Service Director Sean Curran, to much applause during the event.

Surprisingly Partisan

While Daniel’s story has been inspiring, Trump’s focus on the 13-year-old cancer fighter has received its own fair share of criticism. MSNBC host Nicolle Wallace, while referencing Daniel’s love for law enforcement, noted that she is hoping the 13-year-old never has to defend the U.S. Capitol against Trump supporters. “If he does, I hope he isn’t one of the six who loses his life to suicide,” Wallace stated.

Anti-Musk and anti-Trump accounts on X have also thrown jokes at the cancer fighter’s honorary badge, with some dubbing the 13-year-old a “DEI hire” who should be looked into by DOGE.


Tesla owner highlights underrated benefit of FSD Supervised

Elon Musk has been pretty open about the idea of FSD being the difference maker for Tesla’s future.

If Tesla succeeds in achieving FSD, it could become the world’s most valuable company. If it doesn’t, the company would not be able to reach its full potential.

FSD Supervised’s safety benefits:

- FSD is still not perfect, but FSD Supervised is already making a difference on the roads today.

- This was highlighted in Tesla’s Q4 2024 Vehicle Safety Report.

- According to Tesla, it recorded one crash for every 5.94 million miles driven with Autopilot technology engaged.

- For comparison, the most recent data available from the NHTSA and FHWA (from 2023) showed that there was one automobile crash every 702,000 miles in the United States.
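To put the two figures above side by side, here is a minimal sketch of the arithmetic. It assumes the Tesla and NHTSA/FHWA numbers can be compared directly, which may not hold if the two sources define crashes differently or cover different kinds of roads. The constant and function names are illustrative only.

```python
# Figures as quoted in the list above. Treating them as directly
# comparable is an assumption, not something the reports guarantee.
AUTOPILOT_MILES_PER_CRASH = 5_940_000  # Tesla Q4 2024 Vehicle Safety Report
US_AVG_MILES_PER_CRASH = 702_000       # NHTSA/FHWA data from 2023


def crashes_per_million_miles(miles_per_crash: float) -> float:
    """Convert 'one crash every N miles' into crashes per million miles."""
    return 1_000_000 / miles_per_crash


# How many times farther a car travels between crashes, on these figures.
ratio = AUTOPILOT_MILES_PER_CRASH / US_AVG_MILES_PER_CRASH

autopilot_rate = crashes_per_million_miles(AUTOPILOT_MILES_PER_CRASH)
us_avg_rate = crashes_per_million_miles(US_AVG_MILES_PER_CRASH)

print(f"Miles between crashes, Autopilot vs. U.S. average: {ratio:.2f}x")
print(f"Crashes per million miles with Autopilot: {autopilot_rate:.2f}")
print(f"Crashes per million miles, U.S. average: {us_avg_rate:.2f}")
```

On these numbers, the quoted Autopilot figure works out to roughly 8.5 times as many miles between crashes as the national average.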


FSD user’s tale:

- According to an FSD user’s post on social media platform X, FSD Supervised helped him drive a relative to a medical facility safely even though he was exhausted.

- During the trip, the driver only had to monitor FSD Supervised’s performance to make sure the Tesla operated safely.

- In a vehicle without FSD, such a trip with an exhausted driver would have been quite dangerous.

- “This morning, Tesla FSD proved to be an absolute godsend. I had to take my brother-in-law to the hospital in Sugar Land, TX, which is 40 miles away, at the ungodly hour of 4 AM. Both of us were exhausted, and he was understandably anxious about the surgery.

- “The convenience of sending the hospital’s address directly from my iPhone to my Tesla while still inside my house, then just a single button press once inside, and 40 miles later we were precisely in front of the hospital’s admissions area. This experience really underscores just how transformative this technology can be for society,” Tesla owner JC Christopher (@JohnChr08117285) noted in his January 29, 2025 post.

Don’t hesitate to contact us with news tips. Just send a message to simon@teslarati.com to give us a heads up.


Tesla Optimus “stars” in incredible fanmade action short film

There are few things that prove an enthusiast’s love towards a company more than a dedicated short film. This was highlighted recently when YouTube’s SoKrispyMedia posted a 10-minute action movie starring Optimus, Tesla’s humanoid robot, as well as several of the company’s most iconic products.

The video:

- Shot like a Hollywood action flick, the video featured a rather humorous plot involving a group of thieves who mistakenly targeted a Tesla Model 3 driver.

- The Model 3 driver then ended up speaking to Tesla for assistance, and some high-octane and high-speed hijinks ensued.

- While the short film featured several Tesla products like the Model 3, Superchargers, and the Cybertruck, it is Optimus that truly stole the show.

- Optimus served several roles in the short film, from an assistant in a Tesla office to a “robocop” enforcer that helped out the Model 3 driver.

Future Robo-cop @Tesla_Optimus

full video: https://t.co/TXpSRhcP5K pic.twitter.com/YFHZ7siAP7

SOKRISPYMEDIA (@sokrispymedia), January 12, 2025

Cool inside jokes:

- The best Tesla videos are those that show an in-depth knowledge of the company, and SoKrispyMedia definitely had it.

- From the opening scenes alone, the video immediately poked fun at TSLA traders, the large number of owners with gray Teslas, and the fact that many still do not understand Superchargers.

- The video even poked fun at Tesla’s software updates, as well as how some Tesla drivers use Autopilot or other features without reading the fine print in the company’s release notes.

- The video ended with a tour de force of references to Elon Musk products, from the Tesla Cybertruck to the Boring Company Not-a-Flamethrower, which was released back in 2018.

